I’ve been looking a lot recently at JUnit (and TestNG) tests on a code base I’m not too familiar with. In many cases I was not convinced that the tests were adequate, but it took a fair bit of investigation before I could be satisfied that this was the case. I would need to look at the tests, then look at the code they’re meant to exercise, then try to work out in my head whether the tests cover everything they should. To make this process a bit easier, I’ve started running code coverage analysis using Emma. While this doesn’t tell me if a test is good or not, it does show me at a glance how much code is covered by the test and exactly which lines, methods and classes are missed. This is usually a good first approximation for the quality of the test case.

I’ve found Emma to be a useful tool to run after I think I’ve written my test cases and got them working. Running the test case tells me if the code being tested works. Running Emma tells me if I’ve tested enough of the code. There’s no point in having 100% test case successes if the tests themselves only exercise 50% of the code.
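To make the difference between passing tests and covered code concrete, here is a minimal sketch. It is not how Emma works internally: the `hits` map is a hand-rolled stand-in for the hit data a coverage tool records automatically, and the class and method names are made up for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class CoverageSketch {

    // Hand-rolled stand-in for the hit data a coverage tool collects for us.
    static final Map<String, Boolean> hits = new LinkedHashMap<>();

    static {
        hits.put("valid size", false);
        hits.put("invalid size", false);
    }

    // Hypothetical method under test with two branches.
    static String describe(int size) {
        if (size < 1) {
            hits.put("invalid size", true);
            return "invalid";
        }
        hits.put("valid size", true);
        return size + "mm spanner";
    }

    // Percentage of branches that have been executed at least once.
    static int coveragePercent() {
        int covered = 0;
        for (boolean hit : hits.values()) {
            if (hit) {
                covered++;
            }
        }
        return 100 * covered / hits.size();
    }

    public static void main(String[] args) {
        // The only "test": it passes, but never touches the error branch.
        if (!describe(10).equals("10mm spanner")) {
            throw new AssertionError("unexpected description");
        }
        System.out.println("tests passed, coverage " + coveragePercent() + "%");
    }
}
```

Running `main` prints `tests passed, coverage 50%`: every test succeeds, yet half of the method has never been executed.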

IDE Support

I’ve found IDE support for Emma to be a little disappointing. I’ve tried it (in order of preference) in IntelliJ IDEA Community Edition, NetBeans and Eclipse. I’ve so far managed to get it working satisfactorily only in Eclipse. However, there is the option of running it from the command line or as a Maven or Ant plugin.

IntelliJ IDEA

This is still my IDE of choice. However, the Ultimate Edition (the fully featured commercial edition) is a little pricey for hobby work at home, and unfortunately that’s the only edition with code coverage support. The free Community Edition does not include it, and I found that the Emma Code Coverage Plugin, originally written for IDEA 6.0, no longer works with later versions.

NetBeans

NetBeans comes bundled with Emma; however, it’s disabled by default and needs to be installed from the Plugins menu. I also found that this plugin doesn’t play nicely with Maven-based projects. There is apparently a workaround for this, but I’ve not been brave enough to try it.

Eclipse

I found Eclipse integration with Emma to be fairly painless using the EclEmma plugin.

Maven 2

Emma can be launched directly from Maven using the emma-maven-plugin. This can be done directly from the command line:

mvn emma:emma

or the plugin can be executed in the test phase:

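    <plugin>
        <groupId>org.sonatype.maven.plugin</groupId>
        <artifactId>emma-maven-plugin</artifactId>
        <version>1.1</version>
        <executions>
            <execution>
                <id>report</id>
                <phase>test</phase>
                <goals>
                    <goal>emma</goal>
                </goals>
                <configuration>
                    <!-- the element that wrapped this value is missing from the source -->
                    ${project.build.sourceDirectory}
                </configuration>
            </execution>
        </executions>
    </plugin>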

Results are generated as an HTML report.

I find it a little less convenient running it this way. I’d rather view the results in my IDE than a browser. However, this could be handy if you want to generate a report following a production build or an automated / nightly build in CruiseControl or whatever.

Interpreting reports

As an example, I’ve beefed up the error checking in my Hibernate Spanners application from my last post. I’ve added checks to one of my Hibernate DAO methods to reject nulls and non-positive spanner sizes:

    public int create(Spanner spanner) {
        // Reject invalid input before touching the database
        if (spanner == null) {
            throw new IllegalArgumentException(
                "Cannot save null spanner to database");
        }
        if (spanner.getSize() < 1) {
            throw new IllegalArgumentException(
                "Cannot save spanner with non-positive size: " + 100/spanner.getSize());
        }
        return (Integer)getHibernateTemplate().save(spanner);
    }

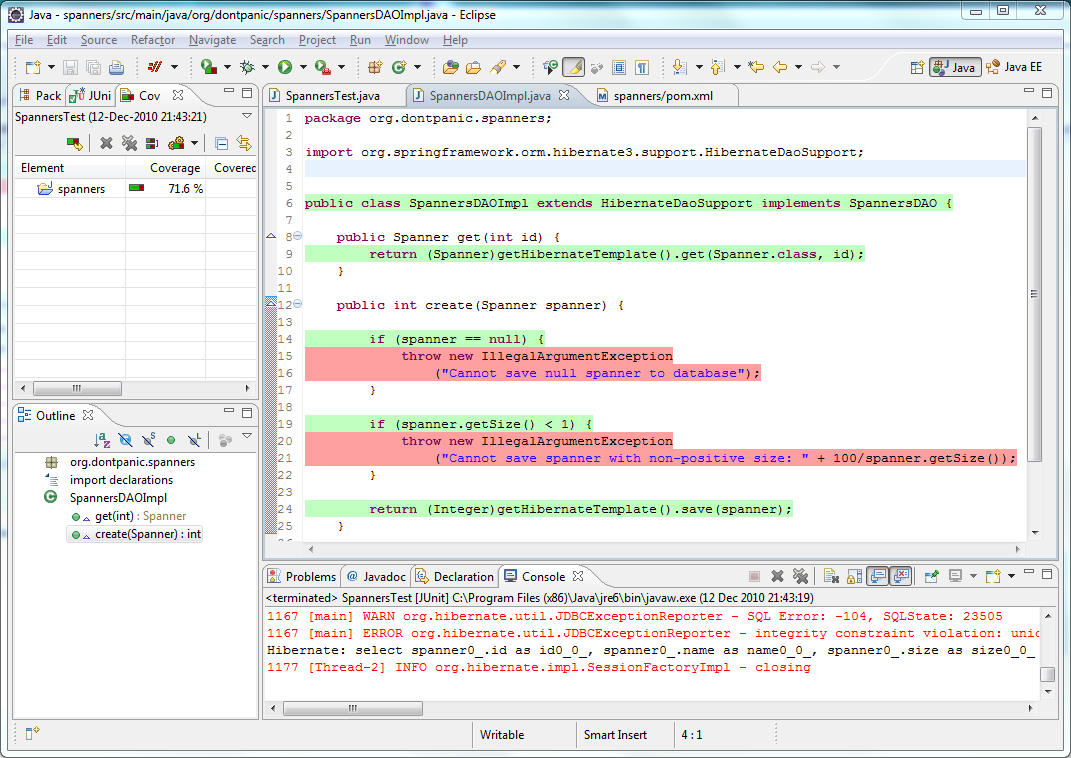

I run my unit tests from before and they show green. However, my new error checks are not exercised by the existing tests. If I run Emma, it clearly shows what I've tested and what I've not:

The green lines have been exercised by my test. Red lines have not. With this new information I may decide to extend my test suite to explicitly test these new cases.

    @Test(expected = IllegalArgumentException.class)
    public void testNullSpanner() {
        spannersDAO.create(null);
    }

    @Test(expected = IllegalArgumentException.class)
    public void testZeroSizeSpanner() {
        // Create a spanner with zero size
        Spanner kate = zeroSizeSpanner();
        spannersDAO.create(kate);
    }

As it happens, adding these new tests catches a bug on line 21. Attempting to add a spanner with zero size causes an ArithmeticException when creating the error message.
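For anyone who wants to reproduce the bug outside of Hibernate, here is a minimal, self-contained sketch. The `Spanner` class is a hypothetical stand-in for the real entity, `validateBuggy` copies the checks from `create()`, and `validateFixed` shows one possible fix (reporting the size instead of dividing by it), which is an assumption on my part rather than the only way to do it.

```java
public class SpannerChecks {

    // Hypothetical minimal stand-in for the Spanner entity; only the size matters here.
    static class Spanner {
        private final int size;

        Spanner(int size) {
            this.size = size;
        }

        int getSize() {
            return size;
        }
    }

    // Same checks as create(): building the message divides by the size.
    static void validateBuggy(Spanner spanner) {
        if (spanner == null) {
            throw new IllegalArgumentException("Cannot save null spanner to database");
        }
        if (spanner.getSize() < 1) {
            throw new IllegalArgumentException(
                "Cannot save spanner with non-positive size: " + 100 / spanner.getSize());
        }
    }

    // One possible fix: put the offending size itself in the message.
    static void validateFixed(Spanner spanner) {
        if (spanner == null) {
            throw new IllegalArgumentException("Cannot save null spanner to database");
        }
        if (spanner.getSize() < 1) {
            throw new IllegalArgumentException(
                "Cannot save spanner with non-positive size: " + spanner.getSize());
        }
    }

    // Returns the simple name of the exception a check throws, or "none".
    static String outcome(Runnable check) {
        try {
            check.run();
            return "none";
        } catch (RuntimeException e) {
            return e.getClass().getSimpleName();
        }
    }

    public static void main(String[] args) {
        Spanner zero = new Spanner(0);
        System.out.println("buggy: " + outcome(() -> validateBuggy(zero)));
        System.out.println("fixed: " + outcome(() -> validateFixed(zero)));
    }
}
```

With a zero-size spanner the buggy version reports `ArithmeticException` and the fixed version reports `IllegalArgumentException`. A negative size would not crash the buggy version, but the message would show the result of the division rather than the actual size.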

Now what?

Using code test coverage analysis we've got a new metric we can use to judge the quality of our code. As with any metric though, some discretion is required. Aiming for 100% test coverage is probably counter-productive. As you creep up above 80% or so you'll find you're writing tests that exercise less and less code. In the example above, I created two new tests that exercised a single line of code each. Obviously there comes a point when you have to decide that the tests are adequate.

I find that this sort of tool is mostly useful to catch dumb mistakes. When I write a test, I have a rough idea of what I think I should be testing. Code test coverage analysis does not tell me if my tests are any good or even if the coverage is adequate. But at a glance I can see what is exercised and what isn't. So if there are whole methods that I meant to test but just forgot, they'll stick out like a sore thumb.

I guess what you mean is a measure of the quality of tests. Tests measure the quality of the code; coverage analysis measures the quality of your tests. Great article regardless. I am using it as a reference.

I guess it doesn’t work well with Spring test cases. At least I have problems with it. Clover is more effective.